MosaicML

Putting large-scale machine learning models within reach for more companies

The 2025 edition of the DCVC Deep Tech Opportunities Report, released in June, explains the global challenges we see as the most critical and the possible solutions we hope to advance through our investing. This is a condensed version of the report’s second chapter.

The makers of today’s frontier AI models say that to make their systems smarter and achieve artificial general intelligence (AGI) and eventually superintelligence, they may need to build computing facilities that are 50 to 1,000 times the size of today’s data centers. Such facilities would have correspondingly greater power needs — which is why these companies are already looking to new energy sources such as advanced geothermal and nuclear power. But before AI providers commit to such a massive scale-up, the industry must grapple with the interlinked problems of energy efficiency and algorithmic efficiency.

It’s become an article of faith among technology policy experts that massive new investments in computing hardware are essential for U.S. competitiveness. We agree — but we think it’s time to get smart and creative about where to find the electricity and water needed to power and cool all this hardware, and how to moderate the industry’s overall energy needs. Before companies spend billions or trillions of dollars on new data centers and power facilities, they should be clear about their reasons for choosing to invest in AI over other valid priorities, and they should think in advance about ways to minimize these technologies’ environmental impact.

One of the big news stories in the AI world as we were writing this in the spring of 2025 was the emergence of a Chinese AI startup, DeepSeek, whose open-source R1 reasoning model performed as well as or better than OpenAI’s GPT-4o and other frontier models available at the time, yet was reportedly trained for a tiny fraction of the money and energy poured into the leading U.S. models. It is too early to tell whether DeepSeek’s innovations are original or whether its cost claims are accurate. But the surprise from China did open up an opportunity to “take stock of unrealized efficiency gains in AI compute before we end up building a bunch of 30-year gas plants,” as Arvind Ravikumar, co-director of the Center for Energy and Environmental Systems Analysis at the University of Texas at Austin, commented on Bluesky.

We believe the best reason to invest more in AI infrastructure and R&D here in the United States is not to secure a stranglehold on the technology, but to ensure that AI develops in ways that are safe, equitable, and energy-efficient. There will always be room for startup-based innovation and venture-scale investment in the field, especially, we believe, in technologies that could supply more computing capability per megawatt.

That’s what DCVC-backed MosaicML, now part of another portfolio company, Databricks, enabled with a platform that lets organizations train their own generative AI models two to seven times faster than conventional methods. It’s also what Q‑CTRL is delivering with its error-suppression software for quantum computers. Q‑CTRL, which we’ve backed since 2018, is helping quantum computing leaders like IBM deal with qubits’ stubborn vulnerability to noise, which can cause them to lose the quantum superposition that underlies their enormous computing potential. The company’s software detects which qubits in a computation are producing errors and helps operators suppress them by adjusting the control pulses that manipulate each qubit. Related error-mitigation methods allowed IBM to show in 2023 that a 127-qubit processor could run physics simulations faster and more precisely than a conventional supercomputer. Error suppression and mitigation open a potential era of “quantum advantage,” in which even noisy quantum computers can provide real value in the near future.

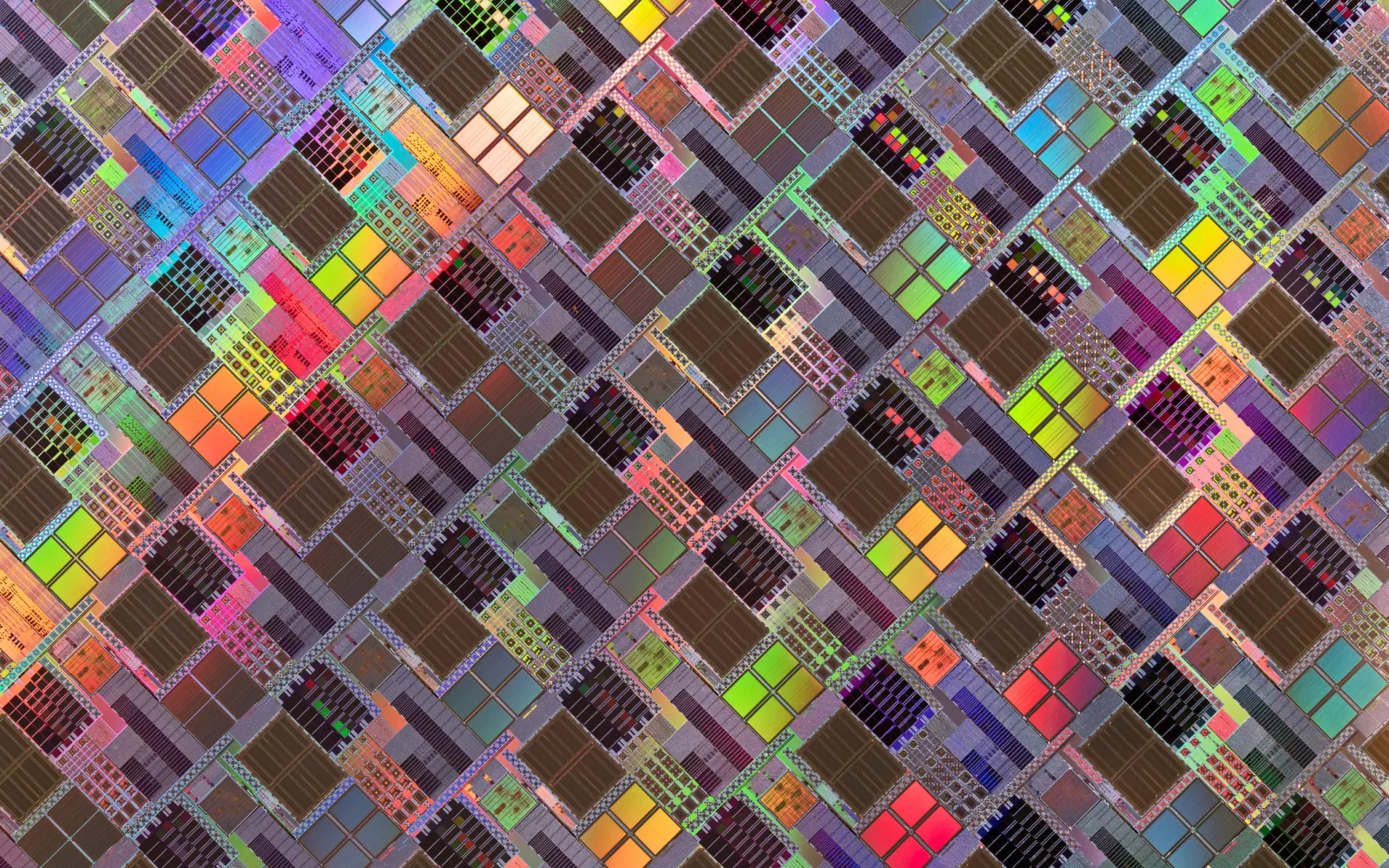

Long before we’ve worked out all the kinks in quantum computers, however, we’ll need to reduce the power demands of classical microchips. That’s long been the focus at Mythic, a maker of analog matrix processors that we’ve backed since 2016. In a traditional digital computer, the parameters used in the training and inference phases of a generative AI program, such as a large language model, are stored in memory and must be shuttled back and forth to a CPU or GPU for processing. In Mythic’s chips, parameters are stored directly in the processor, which the company says makes AI computations 18,000 times faster and 1,500 times more energy-efficient. In fact, the more parameters in an AI model, the greater the advantage of Mythic’s chips over conventional GPUs, according to Mythic CTO and co-founder Dave Fick. “Our early analysis has indicated order-of-magnitude improvements in each metric of cost, performance, and power over Nvidia and leading LLM startups,” Fick writes.

“If the urgency here is for America and the free world to have more compute in more places that works faster and for less power, then we have a whole buffet of options,” says DCVC co-founder and managing partner Matt Ocko. “It could be next-generation chip architectures like Mythic. It could be algorithmic innovations like MosaicML. Or it could be quantum, which is intrinsically a billion to a trillion times more power-efficient per element than conventional chips.” AGI itself may or may not be achievable in the near future; indeed, we’ve argued in the past that it’s a shiny object distracting from more transformative work (see p. 24 of our 2024 Deep Tech Opportunities Report). But either way, the U.S. will need more, faster, and greener computing hardware, and that’s the conviction guiding our investment in this area.