Mythic

Power-efficient AI hardware for distributed devices

A couple of years ago, DCVC hosted a panel featuring the eminent Dr. Beate Heinemann, the Luis W. Alvarez Chaired Professor of Physics at UC Berkeley, who heads one of the most important experiments at CERN’s Large Hadron Collider (LHC).

She talked about how ATLAS, the experiment she ran, needed to deal with up to 600 million events per second, at 1 megabyte of raw data per event. She noted that CERN had deployed FPGAs (pretty expensive chips) to run data-reducing signal processing and ML algorithms at the point of detection, i.e., the edge of the network. It was a fascinating insight from a brilliant woman who leads an amazing team.
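To get a feel for why data reduction has to happen at the point of detection, the two figures quoted above can be multiplied out. This is only back-of-the-envelope arithmetic: it assumes a decimal megabyte (1,000,000 bytes) and the peak event rate, and the function name is ours, for illustration only.

```python
# Figures quoted from the talk; the decimal-megabyte convention is an assumption.
EVENTS_PER_SECOND = 600_000_000  # up to 600 million events per second
BYTES_PER_EVENT = 1_000_000      # 1 megabyte of raw data per event


def raw_data_rate(events_per_second: int, bytes_per_event: int) -> int:
    """Return the raw, unreduced data rate in bytes per second."""
    return events_per_second * bytes_per_event


rate = raw_data_rate(EVENTS_PER_SECOND, BYTES_PER_EVENT)
terabytes_per_second = rate / 1e12

print(f"Peak raw data rate: {terabytes_per_second:.0f} TB/s")  # 600 TB/s
```

At roughly 600 terabytes per second of raw data, shipping everything off the detector for later processing is impractical, which is why the filtering runs at the edge.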

Later, DCVC had the privilege of being an anchor investor in Nervana Systems, which delivered chips and systems that massively improved the AI compute performance/watt envelope, surpassing even Nvidia’s future roadmap.

Nervana was so disruptive that Intel acquired the company before it could even start selling its first chip. Its innovation was reducing data-center-sized AI requirements to a handful of racks, or even to a box in the trunk of your car. Part of Nervana’s success was the ability to do AI compute on massive amounts of data more quickly and with greater energy efficiency than anything else. Thus, Nervana was able to deliver breakthrough performance both for training today’s AI systems (which involves immense training data sets used by various forms of neural networks to build AI models) and for evaluating them (executing those models against live data, also called inference).

But the inference process for AI systems (the execution of those painstakingly created models) has some interesting potential asymmetries. A fully trained neural network can be more compact, from a computer memory perspective, than one being trained, sometimes radically so. The program operations needed to evaluate a neural network (to execute or run the trained-up model), and consequently the type and power profile of the semiconductor transistors that carry them out, can also differ from those used in training in a way that saves a lot of power.
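A rough sketch of that memory asymmetry, under assumptions of our own choosing rather than anything specific to Nervana or Mythic: training is assumed to keep 32-bit weights, gradients, and two optimizer moments per parameter (as Adam-style optimizers do), while inference is assumed to keep only 8-bit quantized weights. The network size is hypothetical, and activation memory is ignored.

```python
# Hypothetical illustration; none of these figures come from Mythic or Nervana.
PARAMS = 10_000_000  # assumed number of network parameters
FP32_BYTES = 4       # bytes per 32-bit floating-point value
INT8_BYTES = 1       # bytes per 8-bit quantized weight


def training_bytes(params: int) -> int:
    """Weights + gradients + two optimizer moments, all stored as float32."""
    copies_per_param = 4
    return params * FP32_BYTES * copies_per_param


def inference_bytes(params: int, bytes_per_weight: int = INT8_BYTES) -> int:
    """Only the (assumed quantized) weights are needed to run the model."""
    return params * bytes_per_weight


train = training_bytes(PARAMS)
infer = inference_bytes(PARAMS)
ratio = train // infer

print(f"training:  {train / 1e6:.0f} MB")
print(f"inference: {infer / 1e6:.0f} MB")
print(f"ratio:     {ratio}x")
```

Under these assumptions the trained model needs about one-sixteenth of the parameter memory used during training. Real ratios depend on the optimizer, the numeric precision, and how much activation memory each phase needs.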

This is where Mythic (formerly Isocline) comes in.

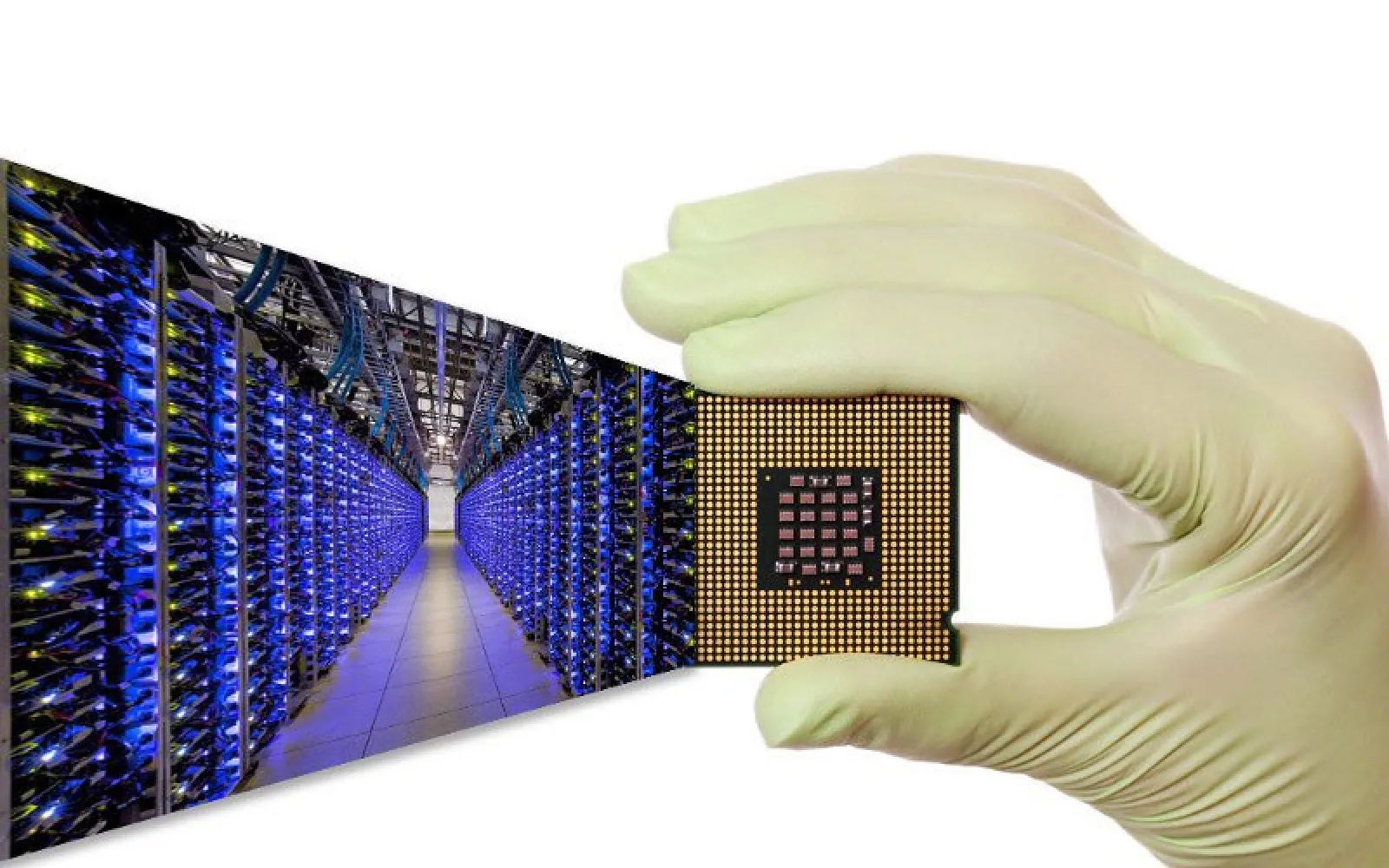

Mythic was born out of the University of Michigan Integrated Circuits Lab in 2012, a lab known for its world-class low-power chip design and multiple venture-backed spin-outs. Co-founders Mike Henry and Dave Fick developed a new deep learning inference model — based on hybrid digital/analog computation — that eliminates costly processors and transfers the deep learning computations to the memory structures storing the algorithm parameters, all while extending battery life by 50x or more.

This design allows the company to essentially put high-end desktop GPU compute capabilities onto a module the size of a shirt button, able to run on a watch battery for years. Mythic’s approach also scales seamlessly, enabling data-center-scale compute capabilities to fit into a card-deck-sized platform that can likewise run on a lithium-ion battery for years. This scalable AI compute can be deployed in anything from a smart birthday card that interacts only with the recipient to industrial IoT devices in the field that use AI for precise systems control and hacker defense. It offers a new level of trust and security to the growing number (soon, billions) of smart devices deployed at the edge.

AI and deep learning are transforming human-like tasks such as speech, vision, language processing, threat detection, and hardware control. They are also becoming an integral part of connected devices. However, at a time when embedded devices (from mission-critical control systems for power plants to children’s toys) are being hacked, it is increasingly important to untether the AI in these devices from the cloud. Mythic’s local AI platform is inherently more secure, responsive, seamless, and easily integrated than “cloud AI.” Thus, embedded systems companies, handheld and consumer device makers, and security platform and monitoring companies view this product as transformative for their products.

Mythic will initially target markets including sophisticated healthcare systems, security and monitoring for commercial and home use, and IoT edge systems and drones for industrial applications. In the near future, Mythic will be deploying its technology in previously impossible-to-address use cases for robotics, autonomous vehicles, and untethered AR and VR.

Mythic has assembled an impressive group of industry experts with the hardware, software, and overall AI experience required to take its unique AI platform to market. The company is forming engagements with early-adopter customers to field test the technology, with volume shipments projected for mid-2018.

From a DCVC perspective, Mythic enables our existing AI-centric companies to deploy their innovations far more broadly than before, and opens up new frontiers “at the edge” for companies that are still just gleams in entrepreneurs’ eyes.

To learn more about Mythic, visit https://mythic-ai.com

Data Collective (DCVC) and its principals have backed brilliant people using deep tech to change global-scale industries for over twenty years, helping create tens of billions of dollars of wealth for these entrepreneurs while also making the world a markedly better place.

DCVC brings to bear a unique model that unites a team of experienced venture capitalists with more than 50 technology executives and experts (CTOs, CIOs, Chief Scientists, Principal Engineers, Professors at Stanford and Berkeley) with significant tenures at top 100 technology companies and research institutions worldwide. DCVC focuses on seed and Series A investments, and then on the growth-stage companies in its own portfolio. Learn more at https://www.dcvc.com and follow us on Twitter at @dcvc.